Short answer: OpenAI’s business model canvas is built around AI platforms (ChatGPT & APIs), enterprise partnerships, subscription revenue, and developer ecosystems, supported by massive infrastructure and research investment.

Instead of explaining OpenAI in abstract terms, this article breaks it down using the Business Model Canvas framework, one block at a time.

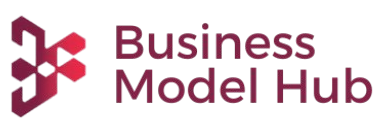

What Is the Business Model Canvas?

The Business Model Canvas is a strategic management tool that maps out how a company creates, delivers, and captures value across nine building blocks. Think of it as a one-page blueprint of a business.

For understanding OpenAI, this framework is particularly useful because it cuts through the AI hype and shows the actual business mechanics: who pays, what they get, and how the whole machine runs.

The framework simplifies complex AI businesses by forcing clarity on each component, from customer segments to cost structure, which makes it easier to see both the strengths and the vulnerabilities of the model.

Customer Segments: Who OpenAI Serves

OpenAI doesn’t serve just one type of customer; it has built a multi-sided platform that targets four distinct segments:

Individual consumers use ChatGPT for everything from writing emails to brainstorming ideas. These are everyday users who interact with AI through a simple chat interface, often starting with the free tier before upgrading to paid plans.

Developers and startups integrate OpenAI’s APIs into their own products. They’re building the next generation of AI-powered applications, from customer service bots to content generation tools, using OpenAI’s models as the engine.

Enterprises and large organizations need AI at scale with security, compliance, and customization. Think Fortune 500 companies integrating AI into their workflows, customer operations, and internal tools.

Platform partners like Microsoft don’t just use OpenAI—they distribute it. These partnerships amplify OpenAI’s reach by embedding its technology into existing ecosystems that already have millions of users.

Value Propositions: What OpenAI Offers

OpenAI’s value proposition varies by customer segment, but all center on accessible, powerful AI:

AI assistants for productivity and creativity help users write, code, analyze, and create faster than ever before. The value isn’t just automation—it’s augmentation of human capability.

Developer APIs for building AI products give builders access to cutting-edge models without needing to train their own. This democratizes AI development, letting small teams compete with tech giants.

Enterprise-grade AI tools come with the security, reliability, and customization that large organizations require. It’s the same powerful models, but with enterprise SLAs, data privacy controls, and dedicated support.

Scalable, continuously improving models mean customers aren’t just buying today’s technology—they’re getting automatic upgrades as OpenAI’s research advances. The product literally gets better over time.

Channels: How OpenAI Reaches Users

OpenAI’s go-to-market strategy uses multiple distribution channels, each optimized for different customer segments:

ChatGPT web and mobile apps provide direct consumer access with minimal friction. Download the app or visit the website, and you’re using AI in seconds. This channel drives massive user acquisition and brand awareness.

API platforms and documentation serve developers who need technical integration. Comprehensive docs, code examples, and developer-friendly pricing make it easy to start building.

Enterprise sales and partnerships involve direct outreach, custom contracts, and relationship management for large deals. This is high-touch, consultative selling for organizations with complex needs.

Platform integrations through the Microsoft ecosystem put OpenAI’s technology inside tools people already use daily—Office, Teams, Azure. This passive distribution channel is extraordinarily powerful.

Customer Relationships: How OpenAI Retains Users

Different customer segments require different relationship strategies:

Self-serve usage for ChatGPT means users can start, use, and upgrade without ever talking to a human. The product itself drives retention through usefulness and habit formation.

Subscription-based engagement creates recurring touchpoints. Monthly or annual billing keeps users conscious of value while creating predictable revenue streams.

Developer support and documentation build community and reduce friction. Forums, guides, and responsive support help developers succeed, which keeps them building on OpenAI’s platform.

Enterprise onboarding and account management provide white-glove service for high-value customers. Dedicated teams ensure successful implementation and ongoing optimization.

Revenue Streams: How OpenAI Makes Money

OpenAI’s monetization strategy is sophisticated, combining multiple revenue models:

ChatGPT Plus and Pro subscriptions generate predictable recurring revenue from individual users. At roughly $20 per month for Plus and $200 per month for Pro, these plans offer enhanced features, priority access, and higher usage limits.

API usage-based pricing charges developers per token processed. This consumption-based model scales with customer success—the more value they get, the more they pay.

Enterprise licensing and contracts involve custom pricing for large organizations. These deals can range from hundreds of thousands to millions annually, often including minimum commitments.

Strategic partnerships and platform revenue, through deals like the one with Microsoft, create both upfront payments and ongoing revenue sharing. Microsoft’s reported $10+ billion investment includes access rights that generate continuous value.
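To make the subscription-plus-usage arithmetic concrete, here is a minimal Python sketch. The per-token rates, the split between input and output tokens, and the names `UsagePlan` and `monthly_revenue` are illustrative assumptions, not OpenAI’s actual prices or API; only the $20 subscription figure comes from the plans described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsagePlan:
    """Hypothetical per-token API pricing (NOT OpenAI's actual rates)."""

    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Usage-based charge: tokens processed times the per-token rate."""
        return (
            input_tokens / 1_000_000 * self.usd_per_million_input_tokens
            + output_tokens / 1_000_000 * self.usd_per_million_output_tokens
        )


def monthly_revenue(
    subscribers: int,
    subscription_usd: float,
    plan: UsagePlan,
    input_tokens: int,
    output_tokens: int,
) -> float:
    """Hybrid revenue: flat recurring subscriptions plus metered API usage."""
    return subscribers * subscription_usd + plan.cost(input_tokens, output_tokens)


# Assumed figures for illustration only.
plan = UsagePlan(usd_per_million_input_tokens=2.0, usd_per_million_output_tokens=8.0)
total = monthly_revenue(
    subscribers=1_000,
    subscription_usd=20.0,
    plan=plan,
    input_tokens=3_000_000,
    output_tokens=1_000_000,
)
print(f"Monthly revenue: ${total:,.2f}")
```

The subscription term stays fixed however much each subscriber uses the product, while the usage term grows with every token processed. That difference is why the two streams behave so differently as customers scale.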

Key Resources: What OpenAI Relies On

OpenAI’s competitive advantage rests on several critical resources:

AI models and research IP are the crown jewels. GPT-4 and future models represent years of research investment and architectural innovations that competitors struggle to replicate.

Massive compute infrastructure powers model training and inference. The computational resources required to train and run these models at scale represent both a moat and a massive capital requirement.

Talent in AI research and engineering is irreplaceable. OpenAI attracts top researchers who push the boundaries of what’s possible, maintaining technical leadership.

Data, training pipelines, and cloud partnerships enable continuous improvement. The systems that collect feedback, retrain models, and deploy updates are as valuable as the models themselves.

Key Activities: What OpenAI Does Daily

The company’s core operational activities span research, development, and safety:

Model research and training involve constant experimentation to improve capabilities, efficiency, and reliability. This is the engine that keeps OpenAI ahead of competitors.

Product development for ChatGPT, APIs, and new features translates research breakthroughs into usable products. It’s the bridge between cutting-edge AI and practical applications.

Safety and alignment work ensures models behave as intended and minimizes harmful outputs. This isn’t just ethics; it’s essential for maintaining trust and avoiding regulatory crackdowns.

Platform scaling and optimization keep services running smoothly as usage explodes. Performance engineering, cost optimization, and reliability work happen continuously behind the scenes.

Key Partnerships: Who Powers OpenAI

OpenAI’s ecosystem depends on strategic partnerships:

Microsoft is the most critical partner, providing cloud infrastructure through Azure, distribution through its product ecosystem, and billions in capital investment. This partnership gives OpenAI scale it couldn’t achieve alone.

Enterprise customers aren’t just buyers—they’re partners who provide feedback, use cases, and validation. Their needs drive product development and create case studies that attract more customers.

Platform developers building on OpenAI’s APIs become stakeholders in its success. They create applications that make OpenAI’s models more valuable, driving a network effect.

Infrastructure and data partners supply the computational resources and training data needed to maintain competitive models. These relationships are foundational to operations.

Cost Structure: Where OpenAI Spends Money

Running OpenAI requires massive, ongoing investment:

Cloud compute and model training represent the largest cost center. Training GPT-4 reportedly cost over $100 million, and serving billions of requests requires continuous infrastructure spending.

Research and safety teams employ some of the world’s most expensive technical talent. Top AI researchers command compensation packages that rival executive pay.

Infrastructure and scaling costs go beyond compute to include networking, storage, monitoring, and all the engineering required to run services at global scale.

Compliance and governance spending increases as AI regulation evolves. Legal teams, policy experts, and compliance infrastructure are necessary costs of operating responsibly.

Strategic Insights from OpenAI’s Canvas

Several strategic patterns emerge from analyzing OpenAI’s business model:

Why OpenAI mixes B2C and B2B: Consumer products like ChatGPT build brand awareness and generate feedback data, while B2B offerings (APIs and enterprise) generate higher margins and revenue predictability. Each reinforces the other.

Platform leverage through APIs: By letting developers build on its infrastructure, OpenAI multiplies its value without building every application itself. The API strategy creates a moat—switching costs increase as developers invest in integration.

Subscription plus usage hybrid monetization: This combination captures value from both casual users (subscriptions) and power users (API consumption). It balances revenue predictability with upside as usage grows.

Infrastructure dependency as a trade-off: Relying on Microsoft’s Azure provides scale and capital but creates vendor lock-in and profit-sharing obligations. It’s a calculated trade-off between speed to market and long-term margin control.

What Founders Can Learn from OpenAI’s Business Model

OpenAI’s canvas offers lessons for any builder:

Platform thinking beats single-product thinking. OpenAI doesn’t just sell ChatGPT—it enables an ecosystem. Your product might be the center, but the platform around it creates defensibility.

Hybrid monetization works at scale. Don’t pick just subscriptions or usage-based pricing. Layer multiple revenue models to capture different customer segments and use patterns.

Partnerships can be growth engines. The Microsoft partnership gave OpenAI distribution, infrastructure, and capital it would have taken years to build independently. Strategic partnerships accelerate what you can’t do alone.

Trust and usability drive adoption. In a market full of AI hype, OpenAI wins by being reliable and easy to use. The best technology doesn’t matter if users don’t trust it or can’t figure it out.

Final Take: Is OpenAI’s Business Model Defensible?

Strengths of the canvas: OpenAI has built multiple moats—technical leadership, brand trust, developer ecosystem, and strategic partnerships. The business model generates revenue from multiple streams while continuously improving the core product.

Risks and dependencies: Heavy reliance on Microsoft creates strategic vulnerability. Massive compute costs compress margins. Competition from Google, Anthropic, and open-source alternatives is intensifying. Regulatory uncertainty could reshape the entire playing field.

Long-term sustainability: The model works if OpenAI maintains technical leadership and finds paths to improve unit economics. As models become more efficient and competition drives API pricing down, the company will need to move up the value chain, either through more sophisticated enterprise offerings or by capturing more value from the applications built on its platform.

The canvas reveals a company that has successfully commercialized cutting-edge research, but one that still faces the fundamental challenge of all platform businesses: staying ahead of commoditization while managing the costs of innovation.
