Short answer: Anthropic’s business model is built around enterprise-grade AI safety, paid API access, and premium AI services, with a strong focus on responsible deployment and long-term enterprise trust.

While competitors race to release flashier features and chase viral consumer adoption, Anthropic has taken a different path: becoming the AI provider that businesses actually trust with their most critical operations.

To understand Anthropic’s business model, we need to look at why it prioritizes safety, how Claude is positioned for businesses, and where revenue actually comes from.

What Is Anthropic?

Anthropic was founded in 2021 by former OpenAI researchers and executives, including siblings Dario and Daniela Amodei, along with a team of AI safety experts who wanted to take a more cautious approach to artificial intelligence development.

The company’s mission is straightforward: build safe, reliable, and aligned AI systems that organizations can depend on without constant worry about unpredictable behavior or reputational risk.

Why Claude stands out in the AI market:

Claude isn’t designed to be the most attention-grabbing AI assistant. Instead, it’s built to be thoughtful, nuanced, and reliably safe. The model excels at understanding context, following instructions carefully, and producing outputs that align with user intentions. For businesses handling sensitive information or operating in regulated industries, these qualities matter far more than being able to go viral on social media.

Anthropic’s Core Value Proposition

Anthropic’s value proposition can be summed up in one phrase: AI you can trust in production.

Safety-first AI design: Every decision in Claude’s development prioritizes reducing harmful outputs and increasing alignment with human values. This isn’t just an ethical stance—it’s a competitive advantage.

Constitutional AI approach: Without getting too technical, Constitutional AI means Claude is trained using a set of principles (a “constitution”) that guide its behavior. Instead of relying solely on human feedback for every judgment, the model learns during training to critique and revise its own responses against these principles, and AI-generated feedback based on them supplements human feedback. The result is more consistent, predictable behavior across diverse use cases.

High-quality reasoning and long-context understanding: Claude is strong at multi-step reasoning, and the models support large context windows, meaning they can work with large documents, codebases, or long conversation histories without losing track of important details.

Enterprise-ready reliability: Claude is built for production environments where consistency matters. Businesses don’t want an AI that works brilliantly 95% of the time but occasionally produces embarrassing or dangerous outputs the other 5%.

Who it’s built for:

Anthropic has been explicit about its target users. Claude is designed for businesses, developers building AI-powered products, and enterprises handling sensitive data. If you’re a healthcare provider analyzing patient records, a law firm reviewing contracts, or a financial institution processing customer queries, Anthropic wants to be your AI partner.

Target Customers

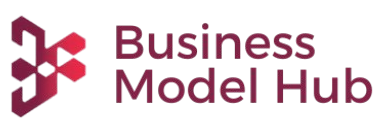

Anthropic focuses on four key customer segments:

Developers building AI products: Software engineers and product teams integrating AI capabilities into their applications via API. These customers need reliable, well-documented models they can build on without constantly firefighting unexpected behaviors.

Startups integrating AI via APIs: Early-stage companies using AI as a core component of their value proposition. They need affordable access to cutting-edge models but also require the safety and reliability that will help them secure enterprise customers down the line.

Large enterprises: Fortune 500 companies and established organizations that need AI solutions at scale. These customers prioritize security, compliance, and predictability over cutting-edge features.

Regulated industries: Organizations in finance, healthcare, legal, and government sectors where AI mistakes can have serious regulatory or legal consequences. These industries are willing to pay premium prices for AI they can actually deploy without excessive risk.

Anthropic Business Model Explained

Enterprise-First AI Model

Unlike OpenAI, whose breakout product was the consumer app ChatGPT and whose enterprise push gained momentum afterward, Anthropic started with businesses in mind. Its strategy is clear: focus on customers who value reliability and safety over mass-market appeal.

This approach means slower growth initially but higher-quality revenue and stronger customer relationships. Enterprise customers sign longer contracts, have higher lifetime values, and are less price-sensitive when they find a solution that genuinely solves their problems.

Reliability and safety aren’t just nice-to-haves in this model—they’re the core differentiators that justify premium pricing.

Platform-Based Monetization

Anthropic offers Claude as a service, not as a free product subsidized by other revenue streams. While there is a free tier for testing and light usage, the business model depends on converting users to paid plans.

The company uses controlled access and usage-based pricing, meaning customers pay for what they use rather than a flat rate for unlimited access. This aligns incentives: customers use Claude more when it delivers value, and Anthropic’s revenue grows with that usage.
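To make usage-based pricing concrete, here is a minimal Python sketch of per-token billing. It assumes a hypothetical rate card with three tiers (named "large", "medium", and "small" to stand in for a lineup of bigger and smaller models) and separate per-million-token rates for input and output. The tier names, rates, and the `request_cost` and `monthly_bill` helpers are illustrative placeholders, not Anthropic's actual prices or API.

```python
"""Sketch of usage-based, per-token API billing across model tiers.

Tier names and per-million-token rates are hypothetical placeholders,
not Anthropic's published prices.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class TierRate:
    input_per_mtok: float   # USD per million input (prompt) tokens
    output_per_mtok: float  # USD per million output (generated) tokens


# Hypothetical rate card: larger, more capable tiers cost more per token.
RATES = {
    "large": TierRate(input_per_mtok=10.0, output_per_mtok=50.0),
    "medium": TierRate(input_per_mtok=2.0, output_per_mtok=10.0),
    "small": TierRate(input_per_mtok=0.5, output_per_mtok=2.5),
}


def request_cost(tier: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one API call, billed only on the tokens it actually used."""
    rate = RATES[tier]
    return (input_tokens * rate.input_per_mtok
            + output_tokens * rate.output_per_mtok) / 1_000_000


def monthly_bill(calls: list[tuple[str, int, int]]) -> float:
    """Sum per-call costs: the bill grows with usage instead of being a flat fee."""
    return sum(request_cost(tier, i, o) for tier, i, o in calls)


if __name__ == "__main__":
    # Same workload (2,000 input tokens, 500 output tokens) priced on each tier.
    for tier in RATES:
        print(tier, round(request_cost(tier, 2_000, 500), 6))
```

With these placeholder rates, a call using 2,000 input tokens and 500 output tokens costs $0.009 on the medium tier and $0.045 on the large tier. Because `monthly_bill` just sums such calls, a customer's spend rises and falls with actual usage, which is the incentive alignment described above.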

How Anthropic Makes Money

Anthropic’s revenue comes from four primary streams:

Paid API access (usage-based pricing): Developers and businesses pay based on how much they use Claude’s API, measured in tokens processed, with input and output tokens priced separately. Different models (Claude Opus, Sonnet, Haiku) have different pricing tiers, allowing customers to choose the right balance of capability and cost for their use case.

Claude Pro and premium subscriptions: Individual users can subscribe to Claude Pro, and teams to the Team plan, for higher usage limits and early access to new features. This provides predictable recurring revenue while also serving as a funnel for larger enterprise deals.

Enterprise contracts and long-term agreements: Large organizations sign custom contracts with committed usage volumes, dedicated support, and sometimes custom deployment options. These deals provide revenue predictability and often include premium pricing for white-glove service.

Strategic partnerships: Anthropic partners with major cloud providers, making Claude available through Amazon Bedrock on AWS and Vertex AI on Google Cloud. These partnerships expand distribution; the commercial terms aren’t fully public but likely involve usage-based fees or revenue-sharing arrangements.

The key insight: Anthropic’s revenue comes from trust, not virality. Companies pay because they believe Claude won’t embarrass them, leak sensitive data, or produce outputs that create legal liability. That’s a fundamentally different value proposition than “free AI that’s fun to play with.”

Cost Structure: Where Anthropic Spends Money

Running an AI company at the frontier is expensive. Here’s where Anthropic invests:

AI research and safety teams: Anthropic employs some of the world’s leading AI researchers, many with PhDs from top institutions. Competitive salaries for this talent represent a significant ongoing cost.

Model training and compute: Training large language models requires enormous computational resources. Each new version of Claude involves weeks or months of training on specialized hardware, costing millions of dollars per training run.

Cloud infrastructure: Serving millions of API requests daily requires substantial cloud computing resources. Unlike Google, Microsoft, or Meta, Anthropic has historically relied on cloud providers such as AWS and Google Cloud rather than operating its own data centers.

Compliance and governance: Maintaining security certifications, conducting safety testing, and ensuring regulatory compliance requires dedicated teams and infrastructure. This is especially important for serving enterprise and regulated industry customers.

Why Anthropic Focuses on AI Safety (Strategic Advantage)

AI safety isn’t just an ethical position for Anthropic—it’s a business strategy.

Enterprise trust equals long-term revenue: Companies will pay premium prices for AI they can trust in production. One major incident with a competitor’s model can send enterprise customers looking for safer alternatives, creating opportunities for Anthropic.

Lower regulatory risk: As governments worldwide develop AI regulations, companies with strong safety practices from day one will face fewer compliance headaches and costly retrofits. Anthropic’s safety-first approach positions it well for whatever regulatory environment emerges.

Differentiation from open or experimental models: In a crowded market, “safest and most reliable” is a clear differentiator. While others compete on who can build the biggest model fastest, Anthropic competes on being the provider businesses actually want to use.

Strong positioning for regulated markets: Healthcare, finance, and legal industries can’t just adopt whatever AI is coolest. They need solutions that meet strict compliance requirements. Anthropic’s safety focus makes it a natural fit for these high-value verticals.

Here’s my opinionated take: Anthropic recognized early that AI’s “move fast and break things” era would be short-lived. As AI moves from experimental toy to critical infrastructure, the competitive advantage shifts from innovation speed to reliability and trust. That’s a bet that looks smarter every time a competitor’s AI makes headlines for the wrong reasons.

Anthropic vs OpenAI vs DeepSeek: Business Model Comparison

Understanding Anthropic means understanding how it differs from competitors:

Openness:

- OpenAI: Started open, became increasingly closed with GPT-4

- Anthropic: Closed models, focused on API access

- DeepSeek: Open-source models, transparent research

Safety focus:

- OpenAI: Safety important but balanced with capability race

- Anthropic: Safety as core differentiator and brand identity

- DeepSeek: Research-focused, less emphasis on deployment safety

Monetization strategy:

- OpenAI: Consumer product (ChatGPT) driving brand, then enterprise

- Anthropic: Enterprise-first, API-driven revenue

- DeepSeek: Primarily a research org; offers a low-cost API, but its commercial model is less clear

Target users:

- OpenAI: Mass market consumers, then businesses

- Anthropic: Developers and enterprises from day one

- DeepSeek: Researchers and technically sophisticated users

Long-term positioning:

- OpenAI: Betting on AGI timeline and being first

- Anthropic: Building a sustainable business while pursuing safety

- DeepSeek: Advancing AI research with efficiency innovations

The strategic difference is clear: OpenAI wants to be the AI everyone knows, DeepSeek wants to advance the science, and Anthropic wants to be the AI that businesses actually deploy at scale.

Go-To-Market and Growth Strategy

Anthropic’s growth strategy differs significantly from typical tech startups:

Enterprise sales-led growth: Rather than relying purely on viral product-led growth, Anthropic invests in enterprise sales teams that work directly with large organizations. This is slower but results in higher-value, stickier customers.

Developer adoption via APIs: By making Claude accessible through well-documented APIs, Anthropic lets developers experiment and integrate Claude into their products. These developers become advocates and eventually enterprise buyers.

Brand positioning around safety and trust: Every piece of communication reinforces Claude’s reputation for being careful, thoughtful, and reliable. This brand equity is particularly valuable when competitors have safety incidents.

Partnerships with cloud ecosystems: Integrations with AWS, Google Cloud, and other platforms expand distribution while lending credibility. When enterprise customers already trust their cloud provider, choosing a vetted AI partner becomes easier.

Risks and Challenges in Anthropic’s Business Model

No business model is without vulnerabilities:

High compute costs: Training and running frontier AI models is extraordinarily expensive. Unlike software with near-zero marginal costs, every API call costs Anthropic real money in compute. If the price per token doesn’t stay comfortably above the compute cost of serving it, for example because competitors push prices down, margins compress even as usage grows.

Slower adoption versus open models: Companies offering free or open-source AI can grow user bases faster. Anthropic risks being outflanked by competitors willing to operate at a loss longer or by open-source alternatives that become “good enough.”

Competition from big tech: Google, Microsoft, Amazon, and Meta have far greater resources to invest in AI. They can afford to subsidize AI products with revenue from other businesses, something Anthropic cannot do.

Balancing safety with innovation speed: Being the most cautious AI company is great for brand positioning but risky if competitors ship features that customers want and Anthropic doesn’t have. There’s a real tension between being safe and being competitive.
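The compute-cost risk is ultimately a matter of unit economics. The minimal Python sketch below uses entirely hypothetical numbers (a made-up `Scenario` with monthly usage, price, and compute cost per million tokens) to show how revenue can grow while gross profit and margin shrink, if competition pushes prices down while the cost of serving each token stays flat. None of these figures are Anthropic's.

```python
"""Hypothetical unit economics of a usage-priced AI API.

All prices and costs are made-up placeholders, not Anthropic's real figures.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    usage_mtok: float             # tokens served per month, in millions
    price_per_mtok: float         # what customers pay per million tokens (USD)
    compute_cost_per_mtok: float  # what serving a million tokens costs (USD)

    @property
    def revenue(self) -> float:
        return self.usage_mtok * self.price_per_mtok

    @property
    def compute_cost(self) -> float:
        return self.usage_mtok * self.compute_cost_per_mtok

    @property
    def gross_profit(self) -> float:
        return self.revenue - self.compute_cost

    @property
    def gross_margin(self) -> float:
        # Share of each revenue dollar left after paying for compute.
        return self.gross_profit / self.revenue


if __name__ == "__main__":
    before = Scenario(usage_mtok=1_000, price_per_mtok=10.0, compute_cost_per_mtok=4.0)
    # Usage doubles, but a price cut leaves compute cost per token unchanged.
    after = Scenario(usage_mtok=2_000, price_per_mtok=6.0, compute_cost_per_mtok=4.0)
    for name, s in (("before", before), ("after", after)):
        print(f"{name}: revenue={s.revenue:,.0f} "
              f"gross_profit={s.gross_profit:,.0f} margin={s.gross_margin:.0%}")
```

Running it shows revenue rising from 10,000 to 12,000 while gross profit falls from 6,000 to 4,000 (margin 60% to 33%): usage doubled, yet the business earned less on it. Falling compute costs per token would push the other way, which is why serving efficiency matters as much as price.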

What Founders Can Learn from Anthropic

Anthropic’s approach offers valuable lessons for any founder:

Trust can be a monetization lever: You don’t need to be the cheapest or the flashiest. In B2B markets especially, being the most trustworthy option commands premium pricing. Anthropic proves that “safe and reliable” is a feature customers will pay for.

Premium positioning works in B2B: Rather than racing to the bottom on price, Anthropic positioned Claude as a premium product for serious use cases. This attracts higher-quality customers and builds a sustainable business model from day one.

Why not all AI products should be free: The assumption that AI must be free to compete is wrong. A free tier can drive trial, as Anthropic’s own does, but free users often don’t value the service enough to pay, while paying customers need the solution and tend to stick around.

Long-term thinking over short-term hype: Anthropic could have chased viral consumer adoption. Instead, it focused on building the foundation for a decades-long business. That’s harder and less glamorous, but ultimately more defensible.

Future of Anthropic’s Business Model

Looking ahead, Anthropic’s business model is likely to evolve in several directions:

Deeper enterprise penetration: As more enterprises adopt AI, Anthropic is well-positioned to capture share in risk-averse industries. Expect more vertical-specific solutions and deeper enterprise sales capabilities.

More industry-specific AI models: Rather than one-size-fits-all AI, Anthropic may develop specialized versions of Claude trained on industry-specific data and optimized for particular use cases (healthcare, legal, finance). Specialized models like these could command higher prices.

Expansion of Claude ecosystem: Beyond the core API, expect tools, integrations, and products that make Claude more embedded in enterprise workflows. The recent launches of Claude Code and Claude in Chrome point to this strategy.

Potential platform dominance in regulated sectors: If Anthropic can become the default AI choice for highly regulated industries, it creates network effects and switching costs that make the position extremely defensible.

Final Verdict: Is Anthropic’s Business Model Sustainable?

Clear takeaway: Yes, Anthropic’s business model is not only sustainable but potentially more durable than those of more consumer-focused competitors.

The company has identified a clear value proposition (trustworthy AI for businesses), targeted customers willing to pay premium prices (enterprises in regulated industries), and built a brand around the exact qualities those customers need (safety and reliability).

Who this model is ideal for: Organizations that can’t afford AI mistakes, developers building serious products on AI infrastructure, and any business where predictability matters more than cutting-edge capabilities.

Why Anthropic is playing a long, defensible game:

The race to build AI isn’t just about who gets there first—it’s about who businesses actually want to work with when AI becomes infrastructure rather than novelty. Anthropic recognized early that the real money in AI won’t come from viral tweets about clever prompts, but from companies trusting AI with their core operations.

By focusing relentlessly on safety and reliability while competitors optimize for capability and speed, Anthropic is building exactly the moat that matters: enterprise trust. That’s the kind of advantage that’s extraordinarily hard to replicate, even with unlimited capital.

In a market where everyone is zigging toward bigger models and more impressive demos, Anthropic is zagging toward boring reliability. And boring, it turns out, is exactly what businesses want to pay for.

Discover more from Business Model Hub
